Bluetooth & Wi-Fi Interoperability Testing: Building Future-Proofed Connected Products

Long after they were first introduced, Bluetooth and Wi-Fi continue to advance. In providing a real-world solution to the problem of tangled Ethernet, audio and USB cables, they have helped to spark a huge growth in connected product development that has, as a consequence, created new challenges for QA and engineering departments.

The pressure of hitting go-to-market deadlines has never been higher, because the competition to produce quality products for consumers has never been greater.

The Bluetooth Special Interest Group (SIG) experienced a 70% growth in membership between 2013 and 2018. At an 8% compound annual growth rate, Bluetooth device shipments are set to surge from 5.4 billion devices in 2024 to 7.5 billion by 2028.

And because the number of devices being shipped is at an all-time high, so too are the stakes involved with every release. Product recalls are a PR disaster. So today testing defines the success of every new release - and with it a manufacturer’s reputation.

Building future-proofed connected products is the guaranteed way to get customers to build a long-term relationship with your business.

Understanding the drivers of Bluetooth & Wi-Fi growth

Both Bluetooth and Wi-Fi continue to advance because the common use cases are now embedded in our everyday lives and expectations.

- Audio streaming (such as wireless headsets, wireless speakers and in-car systems)

- Data transfer (such as wearable devices, healthcare technology and computer peripherals)

- Location services (such as item finding, asset tracking, wayfinding and point of interest information)

- Device and sensor networks (such as control, monitoring and automation systems)

- Smart home devices (such as televisions, speakers, thermostats, home security, lighting and voice assistants)

However, Bluetooth and many Wi-Fi devices operate in the same 2.4 GHz ISM band, so they need to coexist without creating problems for each other. And because Bluetooth and Wi-Fi are designed to provide an alternative to traditional cabling, successful interoperability is essential. If you are building connected products, extensive interoperability testing is vital to your business’s long-term prospects.

Five reasons Bluetooth and Wi-Fi interoperability testing is crucial

- Certifying products/devices to recognised qualification standards.

- Avoiding post-release defects and PR disasters that can have dramatic impacts on brand perception.

- Upholding product and brand reputation, which is developed over decades but can be lost in a matter of weeks.

- Reducing customer service complaints and increasing end-user satisfaction levels.

- Ensuring interoperability – the core promise of Bluetooth and Wi-Fi devices – is seamlessly delivered without hassle or impediment.

Interoperability Issues in Consumer Products

Over 20 years, Bluetooth and Wi-Fi have made substantial progress. Yet several challenges remain in sight.

Despite big improvements in compatibility, power demands and range, Bluetooth interoperability issues are longstanding and well known. An article from Business Insider in 2018 conveyed the frustration that some users continue to feel.

Resolving these feelings of frustration is only possible with targeted action. So which, precisely, are the most common issues at play?

1. Device range

The operable range of Bluetooth connections varies between devices.

2. Inconsistent profile support

Not every device supports Bluetooth in the same way. Spotting and rectifying causes of interoperability problems can help to eliminate end-user frustrations.

3. Device pairing, usability and connection issues

Initial pairing and establishing a connection is the first step in any Bluetooth connection process. If this is not reliable, a product is fatally flawed.

4. Different compliance standards

Both Bluetooth and Wi-Fi standards have been maturing for 20 years. Bluetooth standards have been through at least six major iterations; Wi-Fi standards even more. Trying to connect devices operating to different standards can cause difficulties. This lies at the root of many interoperability issues.

5. Slow data transfer at the extremes of range

Data rates drop as distance increases. However, it is important to remember this is a relative issue. Bluetooth 5 / LE transfer speeds of 1-2 Mbps may be slow compared to Wi-Fi or future iterations of Bluetooth, but if they are fast enough to do what is needed then this quickly becomes a side issue from a user perspective.

6. Poor audio quality

Audio quality is a function of factors relating to the distance between products, interference, and coexistence. When Wi-Fi and Bluetooth are used simultaneously, a device may struggle to meet the parallel demands. For example, a phone might be unable to process a Wi-Fi download while streaming Bluetooth audio content without interruption. The result can be a break-up of the audio stream, either with what sounds like interference, gaps or pauses, or a complete halt of the audio.

7. Differing frequency bands

Wi-Fi frequency bands differ from region to region. The location a device is set to is an important factor that many users fail to consider. If you import a laptop from North America to Europe, you may struggle to get optimal Wi-Fi performance simply because the global standards are different.

Bluetooth and Wi-Fi Interoperability: What Should You Test?

Understanding the three main levels of testing will help to organise your efforts and ensure your consumer product is ready for release.

Technical standards

Your product must comply with the relevant technical standards before it can carry Bluetooth and Wi-Fi branding and logos. A certification lab will often be needed to confirm that the design is compliant.

Device certification

Securing device certification relies on your product passing the current standards when tested using the Bluetooth Profile Tuning Suite (PTS) or Wi-Fi Test Suite. For Bluetooth, you will need to test at both controller and host level, including verification of forward error correction (FEC) and middle layer protocols. The PTS is designed to highlight issues for resolution and emulate invalid behaviours.

For Wi-Fi connections, Wi-Fi Test Suite uses seven different components to execute test cases. Typical areas for examination include device and service discovery, connection stability, application issues, timing and transmitter power. Once you’ve proven that your device complies with the standard requirements, you can use the Bluetooth or Wi-Fi logo on your packaging and marketing material.

Interoperability

If you are meeting device certification standards, theoretically your device should not present any problems to its users. But real-world experiences are the only genuine moment of truth. Making sure your device works not just on paper, but in the hands of your customers, is the ultimate test.

This ensures your device does what it ought to do and behaves how the end user expects. Real-world uses can be jeopardised by subtle interpretations during your certification testing. These can have unexpected effects, so your product needs to be tested with other third-party devices already on the market to ensure it functions correctly. Take time to ensure the devices you test your product with are correct in both a recency and geographical context.

Researching devices is critical to success. For example, some devices are unique to China, India, North America or Latin America. So selecting the correct devices to test with your product will depend on where your product is destined. You must work to ensure your product has been thoroughly tested for interoperability with other products that will be expected to connect and work with it.

How to Complete Bluetooth and Wi-Fi Interoperability Tests

Focus on the fundamentals: does your device work as your user expects? The starting point for all interoperability testing must always be the end-user experience.

It is crucial to first take a step back from protocols and assess the bigger picture. Does the device functionality work at a user level? Testing should aim to emulate the precise end-user experience and any environmental changes that may occur while the device is in operation. Only if there is a problem should we use protocol or packet analysers to start to determine its specific nature.

Common Testing tools

Bluetooth Protocol analyser (Bluetooth LE / Bluetooth Classic)

Protocol testers or RF sniffers are crucial. They monitor and store all the individual communications happening between devices. So if an issue occurs, you can quickly use the log or ‘air trace’ to determine where the fault is and how best to debug.

IP Packet analysers (Wi-Fi)

Wi-Fi packet analysers are similar to Bluetooth protocol analysers, capturing and revealing what is happening at the lowest level so you can quickly resolve network, software and communications trouble spots. Wireshark and OmniPeek are two leading examples of IP packet analysers.

Manual vs Automated Bluetooth and Wi-Fi Interoperability Testing

Manual testing is quick and easy to set up and, after all, being human is a great qualification for testing like a human!

Manual testing is often seen as a natural fit for end-user testing. But where manual interoperability testing breaks down is in testing complex scenarios. When you’re testing the entire functionality of a product, you must reduce the number of variables so that you can test things sequentially.

With automated testing, you can test many more variables and carry out much more complex testing than you could with a manual process. You can also dive straight into debugging, because many automated test platforms capture all logs and air traces as testing happens.

You can integrate low-level debugging capabilities into your interoperability testing and do it all at once. Your test platform can capture everything that’s happening, without having to do separate debug testing or repeat testing for analysis after you find a fault.

The Pros and Cons of Manual Testing

Pros

Manual testing allows you to truly test your product from an end-user’s experience. Test engineers go through the same process of pairing devices through menus that consumers will face once the product is launched. This generates a deep understanding of any potential issues they will face.

Manual testing also has other inherent plus points. It is speedy to roll out, allows for quick exploration of unexpected results and is ideal if you want to run a fast ‘smoke’ test to establish if previous issues have been resolved.

Cons

While appropriate for some circumstances, there are also indisputable challenges associated with manual testing.

The test engineer resource required scales linearly with test load. It requires significant investment in agile test specialists and is normally only operable during working hours.

Deeper issues can often be overlooked, because it is impossible to fully document and reliably reproduce every scenario with a limited manual resource operating under strict time pressures.

Manual testing also makes it harder to ensure consistency in testing, making it much more difficult to confidently compare and analyse test results done at different times or in different locations.

The Pros and Cons of Automated Testing

Pros

Automated testing should not merely be a repeat of your manual testing. Automation can stress hardware in different ways to test features more dynamically. For example, take into account the power requirements over time. Battery level can affect performance, so running a series of automated tests at different power levels can produce results that reveal real performance changes in different power scenarios.

Consideration needs to be given to automated testing of battery-powered devices. Take, for example, a headphone device controlled by physically tapping the headset to go to the next or previous track. If you keep testing the scenario as power drops, performance may change and eventually the battery will deplete. The goal, therefore, when running automated tests on a battery-powered product for a long period of time is to interleave the tests with a charging cycle so that testing can continue.
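One way to picture interleaving tests with charging is the minimal Python sketch below. It is an illustration under stated assumptions, not a real harness: `SimulatedHeadset`, its drain rate and the 20% / 80% thresholds are all hypothetical, and a real rig would drive the physical tap gesture and a controllable charger instead.

```python
from dataclasses import dataclass


@dataclass
class SimulatedHeadset:
    """Stand-in for a real device under test. All values are illustrative."""
    battery_pct: float = 100.0
    drain_per_test: float = 4.0  # assumed battery cost of one test run

    def run_test(self, name: str) -> bool:
        # A real harness would trigger the tap and verify the track change.
        self.battery_pct = max(0.0, self.battery_pct - self.drain_per_test)
        return self.battery_pct > 0.0  # a flat battery fails the test

    def charge(self, target_pct: float) -> None:
        self.battery_pct = max(self.battery_pct, target_pct)


def run_with_charging(device, tests, min_pct=20.0, charge_to_pct=80.0):
    """Run tests in order, pausing to charge whenever the battery is low.

    Returns (event, test_name, battery_pct_before_event) tuples so each
    result can be compared against the battery level it ran at.
    """
    log = []
    for name in tests:
        if device.battery_pct < min_pct:
            log.append(("charge", name, device.battery_pct))
            device.charge(charge_to_pct)
        level = device.battery_pct
        passed = device.run_test(name)
        log.append(("pass" if passed else "fail", name, level))
    return log


if __name__ == "__main__":
    events = run_with_charging(SimulatedHeadset(), ["next_track", "previous_track"] * 25)
    charges = sum(1 for event, _, _ in events if event == "charge")
    print(f"{len(events) - charges} tests run, {charges} charging cycles")
```

Recording the battery level alongside each result keeps the earlier point in view: the same test can then be compared across different power levels rather than only at full charge.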

Automated testing quickly relieves the strain on test engineering and QA teams, allowing for more extensive test scenarios and 24/7 operation. This means more tests can be completed in a given timeframe, with greater test coverage and lower costs-per-test providing significant benefits.

Tightly-defined test processes mean teams follow strictly controlled, consolidated processes, removing market and location differences and allowing for easy comparisons between all test results – regardless of when and where they were generated.

Cons

If the business is new to automated testing, it will need to factor in the early set-up costs of deploying an automated testing platform and training staff.

The costs of recruiting or re-skilling engineers should not be underestimated. You may also have to invest time and energy into redesigning your internal testing processes.

The cost of this training is not equal among automated platforms, with some systems offering visual interfaces and no code solutions.

So the ease of use and scalability of an automated platform should be considered as part of the selection process.

Top Issues That Manual Testing Can Miss

There are some real-world examples of how automated testing can uncover hidden issues that manual testing may miss.

Coexistence issues are an example where manual testing may not uncover all problems. They may occur where a mobile phone shares an antenna between Bluetooth and Wi-Fi, or where the receivers and transmitters are physically close enough that one transmitter saturates the other receiver, causing gaps in received transmissions. If the transmit power is high enough, the saturation can occur even if the frequencies are different (e.g., 2.4 GHz and 5 GHz).

Bluetooth and Wi-Fi coexistence is implemented automatically in most products. The principle is that the loss of either Wi-Fi or Bluetooth for a small period is acceptable. The devices are often designed to prioritise one over the other, for example during pairing or discovery. The devices communicate and agree whether Bluetooth or Wi-Fi should be the higher priority and which will get precedence. Setting these priorities is a complex process and some implementations perform better than others.

In practice, however, automated testing may help to uncover scenarios where Bluetooth discovery failures may unexpectedly be encountered during pairing because Wi-Fi is already enabled by the end user and heavily loaded (e.g. when a firmware update is in process or the user has enabled an active hotspot). Automation uncovers the issues by testing these complex use cases simultaneously.
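To make the idea of testing combined use cases concrete, here is a small Python sketch that enumerates every pairing of a Wi-Fi load state with a Bluetooth operation. The state and operation names are assumptions chosen to echo the examples above; a real test plan would define its own and hand each combination to the automation platform.

```python
from itertools import product

# Hypothetical Wi-Fi load states and Bluetooth operations to combine.
WIFI_STATES = ["off", "idle", "firmware_download", "hotspot_active"]
BT_OPERATIONS = ["discovery", "pairing", "audio_streaming"]


def build_coexistence_matrix(wifi_states, bt_operations):
    """Return one test case per (Wi-Fi state, Bluetooth operation) pair."""
    return [
        {"wifi": wifi, "bluetooth": bt}
        for wifi, bt in product(wifi_states, bt_operations)
    ]


if __name__ == "__main__":
    for case in build_coexistence_matrix(WIFI_STATES, BT_OPERATIONS):
        print(f"Wi-Fi {case['wifi']:<18} + Bluetooth {case['bluetooth']}")
```

Even this small matrix produces twelve combinations, and each added dimension (device model, distance, battery level) multiplies the count, which is where running every case by hand stops being practical.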

Hidden data retransmissions

Bear in mind that transmitted data may not permanently fail to be sent, but can be retransmitted until it arrives successfully. This can lead to a manual test passing without apparent issues while an underlying issue still exists.

Audio may be present, for example, but it may suffer occasional short dropouts which are easy to miss when manually testing. Data transmission protocols ensure data is transmitted until the target devices successfully receive them. However, external interference may cause data to be transferred multiple times - slowing data rates and potentially causing issues which may not be detected. These may produce manual test passes where the test case is marginal but the operation is successful.

In the real world, the user may find the exact same use case unexpectedly fails where it previously passed on the test bench. Automated testing can detect these marginal conditions and highlight where successive retransmissions are occurring, allowing for a more robust handling of data exchange in less than optimal or marginal conditions.
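A hedged Python sketch of how a marginal pass might be flagged is shown below. The `TransferResult` fields and the 10% threshold are assumptions for illustration only; in practice the packet counts would be extracted from an air trace or platform log, and the threshold would be tuned to the product.

```python
from dataclasses import dataclass


@dataclass
class TransferResult:
    """Hypothetical per-test summary, e.g. derived from an air trace."""
    test_name: str
    completed: bool          # did the transfer finish from the user's view?
    packets_sent: int        # total transmissions, including retransmissions
    packets_delivered: int   # unique packets successfully received


def retransmission_rate(result: TransferResult) -> float:
    """Fraction of all transmissions that were retransmissions."""
    if result.packets_sent == 0:
        return 0.0
    return (result.packets_sent - result.packets_delivered) / result.packets_sent


def classify(result: TransferResult, marginal_threshold: float = 0.10) -> str:
    """Separate clean passes from passes that hide heavy retransmission."""
    if not result.completed:
        return "fail"
    if retransmission_rate(result) > marginal_threshold:
        return "marginal pass"
    return "pass"
```

A manual tester would see both a clean pass and a marginal pass as the same successful transfer; only the logged counts distinguish them.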

How bugs manifest

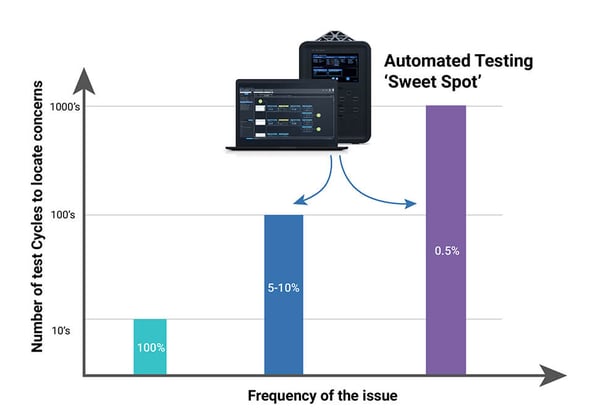

When issues have a high occurrence rate (i.e., they fail all or most of the time), manual testing should highlight these failures easily since the issue will be seen when doing a low number of repetitions and should be reproducible easily.

However, in more marginal conditions where failures occur less frequently, the manual test process may not see them. In these circumstances, automated testing and repetition can provide deeper insight into issues with lower failure rates.
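The link between failure rate and repetitions can be made concrete with a short calculation. The Python sketch below assumes each repetition fails independently with the same probability, which is a simplification (real failures often cluster), so treat the numbers as a rough guide rather than a guarantee. The function names are illustrative.

```python
import math


def detection_probability(failure_rate: float, repetitions: int) -> float:
    """Chance of seeing at least one failure in the given number of runs."""
    return 1.0 - (1.0 - failure_rate) ** repetitions


def repetitions_needed(failure_rate: float, confidence: float = 0.95) -> int:
    """Smallest number of runs that exposes the failure with the given confidence."""
    if not 0.0 < failure_rate < 1.0:
        raise ValueError("failure_rate must be between 0 and 1 (exclusive)")
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must be between 0 and 1 (exclusive)")
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - failure_rate))


if __name__ == "__main__":
    for rate in (0.5, 0.1, 0.01):
        runs = repetitions_needed(rate)
        print(f"failure rate {rate:.0%}: about {runs} runs for 95% confidence")
```

Under these assumptions, a fault that occurs half the time shows up within a handful of runs, while a fault that occurs 1% of the time needs roughly 300 repetitions to be seen with 95% confidence, a volume that is rarely realistic by hand.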

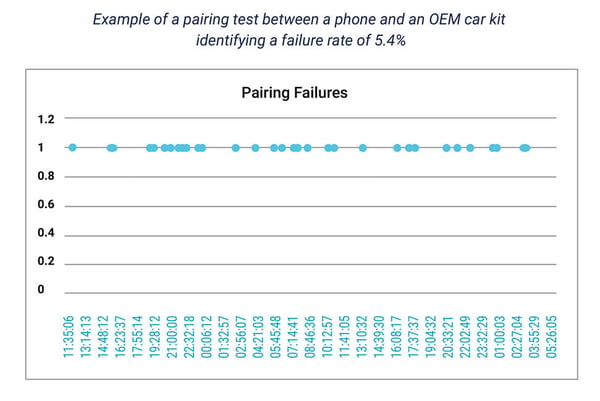

These illustrations show the ‘sweet spot’ for automation, highlighting cases where the test fails occasionally over time. In each case, there can be a variance in behaviours caused by external factors which can impact user experiences. In the second example, the time to connect can vary from an average of around two seconds to 13.5 seconds at extremes.
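Variance of this kind is easy to surface once connection times are logged for every run. The Python sketch below summarises a list of times and flags unusually slow runs; the sample values are invented for illustration and merely echo the two-second and 13.5-second figures above, and the "three times the median" rule is an assumed threshold rather than an established one.

```python
import statistics


def summarise_connect_times(times_s, slow_factor=3.0):
    """Summarise connection times and list runs far slower than the median."""
    median = statistics.median(times_s)
    return {
        "mean": statistics.mean(times_s),
        "median": median,
        "max": max(times_s),
        "slow_runs": [t for t in times_s if t > slow_factor * median],
    }


if __name__ == "__main__":
    # Illustrative data only, not measurements from a real device.
    sample = [2.0, 1.9, 2.1, 2.0, 13.5, 2.2, 1.8, 2.0]
    summary = summarise_connect_times(sample)
    print(f"median {summary['median']} s, max {summary['max']} s")
    print(f"slow runs: {summary['slow_runs']}")
```

Comparing against the median rather than the mean keeps a single extreme run from shifting the baseline it is being judged against.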

Six Strategies to Improve Device Testing

What are the best approaches to ensure your testing programmes are robust, cost-effective and reliable?

1. Embrace automation

Advances in technology create challenges as well as opportunities. As complexity rises in Bluetooth use cases, such as Mesh networks with multiple nodes, automation is the only way to reliably test.

Consider, for example, 100 Bluetooth Mesh controlled lights in a warehouse or industrial setting. It is simply beyond the scope of manual testing to ensure each light node works and behaves as it should. An automation tool is the only appropriate solution to test performance before deployment.

It is simply a matter of scale. When the permutations expand, test automation moves from ‘nice-to-have’ to being business critical.

Imagine another scenario, such as Bluetooth LE broadcasting audio to visitors passing through an airport duty free store. One visitor wants to listen to offers on cosmetics, another to electronics, and so on. Automation is the only practical way to test complex simultaneous user scenarios and sets of capabilities.

2. Test the right devices

The success of your interoperability testing relies on the library of devices with which you test your own product. Developing an appropriate device library requires thorough market research to select the correct devices. You must understand which in-market devices are most appropriate to test, factoring in considerations such as when devices launch to market and their regional availability.
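As a rough illustration of filtering a device library by recency and region, consider the Python sketch below. The `Device` record, the device names and the region codes are all hypothetical placeholders; a real library would be built from market research and would likely carry more attributes, such as market share or Bluetooth version.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Device:
    """Hypothetical entry in an interoperability device library."""
    model: str
    regions: frozenset   # markets where the device is sold
    launch_date: date


def select_devices(library, target_region, launched_on_or_after):
    """Keep devices sold in the target region and launched recently enough."""
    return [
        device
        for device in library
        if target_region in device.regions
        and device.launch_date >= launched_on_or_after
    ]


if __name__ == "__main__":
    # Placeholder entries, not real products.
    library = [
        Device("Phone A", frozenset({"EU", "NA"}), date(2023, 9, 1)),
        Device("Phone B", frozenset({"CN"}), date(2024, 3, 15)),
        Device("Phone C", frozenset({"EU"}), date(2019, 5, 20)),
    ]
    for device in select_devices(library, "EU", date(2022, 1, 1)):
        print(device.model)
```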

3. Invest in tools

The logs and traces from analysis tools can help you to pinpoint problems quickly and easily. You will also need a way to store and manage the data you gather for analysis. However, tools alone will not resolve any issues you encounter, as analysis of the results and comparison of different data sets to pinpoint root cause is essential.

Automation platforms can reduce this burden by providing detailed multi-source logging that highlights key data points and enables fast analysis. Automation platforms also free test engineer resources to focus on this analysis work rather than conducting the tests.

You should proactively invest in training to maintain and enhance your team’s knowledge and insight into the latest technical standards. This analysis level is key to debugging products.

4. Consider the product lifecycle

A phone, for example, might have a typical product lifecycle of two years, while a car’s infotainment system might be more than five years. So testing only at product launch is never enough.

During your product’s lifecycle, new devices will enter the market. It is important to develop an ongoing in-market testing strategy to remain compatible as the market composition evolves, and this will often be closely tied into an appropriate firmware update strategy that ensures ongoing performance over the product lifetime.

Forward investing in your product in this way will ensure it remains compatible over its lifetime and help to both build customer loyalty and prevent costly reputational impacts.

5. Tell your users how good your interoperability is

Publish your interoperability results. For example, microsites are a great way for users to view and interact with the data gathered. It is important to have a method to share issues - even, or especially, when it is not your product at fault.

6. Attend UPF events

The Bluetooth UnPlugFest (UPF) events, sponsored by Bluetooth SIG, represent a great opportunity to test products in groups before going to market. Think of it like speed dating for connected technology! Engineers from different companies bring the products they have in development, allowing all parties to understand how well their future products work together. Being able to test not-yet-released third-party products with your own devices before you go to market is a significant competitive advantage.

Conclusion

Keep the focus on what really matters.

Bluetooth and Wi-Fi only exist to make the user’s life easier. By providing an alternative to messy and restrictive cabling, they are one of the greatest – yet simplest – examples of customer-centricity.

Adopting a similar approach with your product will stand you in good stead. Consumers will not look at your product as a group of individual functions and technical elements. They will consider the overall product as a whole. Whether they are investing a relatively small amount, or making a major purchase such as a smartphone or even a car, the increasing prevalence of technology in our everyday lives is driving ever-higher customer expectations for all connected products.

Your interoperability testing programme should be designed to meet these expectations by testing in the same way the end user will use your product, holistically.

Only one outcome matters: does your device deliver on its promise? Does it do what the customer expects, first time, every time - and without hassle or interruption?

This should always be the starting point. It is an approach that has fuelled the growth of one of the world’s most prominent and successful companies. Apple has excelled at looking at things from a user perspective rather than a technical perspective. It has made testing a product’s overall functionality its starting point. Only if elements do not hang together does it dive deeper into the debug process.

Designing with this approach means you make products which are more intuitive, more universally applicable, more usable – and ultimately much more successful.