Case Study: Automated HID performance testing

Exploring the history of human interface devices and how OEMs use automation today to ensure that HID devices remain accurate throughout the product lifetime.

“Using HID automation testing in conjunction with the cobot to simulate human interactions we can build a highly accurate characterisation of our premium keyboard performance in a way that no human based manual testing can match.”

Product Development Engineer, Keyboard OEM, Shenzhen

Introduction

Since the invention of the personal computer, the mouse and keyboard have remained largely unchanged. It is a testament to the foresight and creativity of the engineers and designers of the time that they addressed the needs of the end user so comprehensively.

As we have become more mobile, the wires and cables connecting these products have been replaced by wireless connections, which require integrated batteries and charging systems. In many cases, disposable cells are used instead, with a corresponding environmental impact.

Because of the power consumption of earlier wireless technologies, these products needed to be charged frequently. A new generation of wireless standards, such as Bluetooth Low Energy, is now redefining the design and operation of human interface devices.

Looking forward, we anticipate that low energy wireless standards will significantly reduce, if not eliminate, the need for integrated batteries. HID products will use kinetic energy generated while the device is being operated, or harvest sufficient energy from the environment (heat and light), to power their operation.

In this case study, we explore the challenges faced by the end user and how innovative test methods can measure performance and improve the end user experience.

Operating Challenges Facing Low Power HID Devices

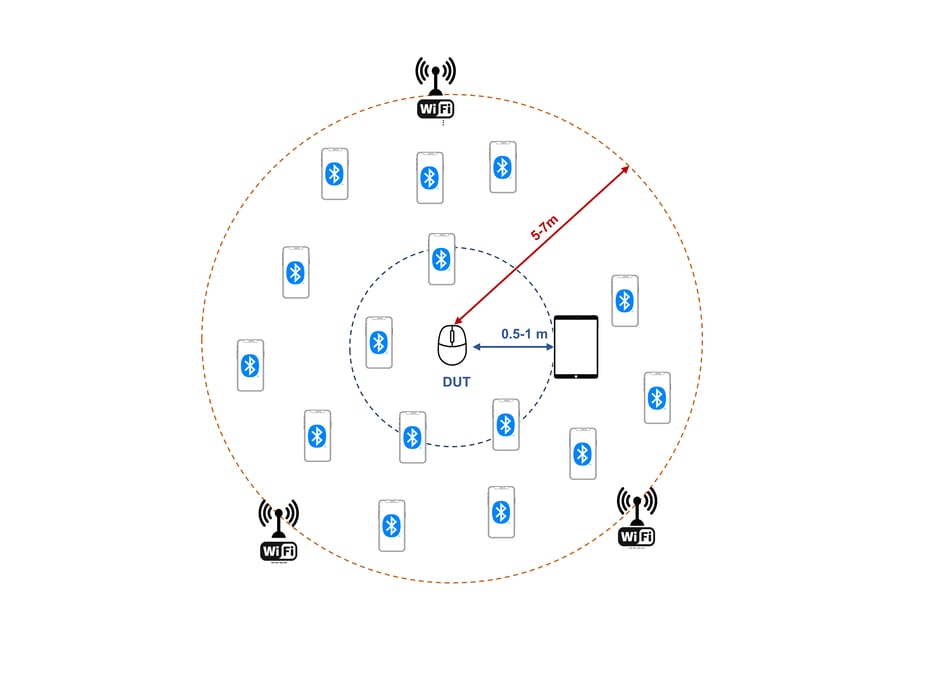

With the advent of the Internet of Things, many products in our environment are connected using wireless technologies. Consequently, it is important to define the challenges that low power wireless solutions will face once deployed. Product operation will inevitably be disrupted by spectrum congestion and energy spikes from surrounding products. The key requirement is to ensure that any disruption is minimal and imperceptible to the end user.

In isolation, our low power HID products will work well. This is typically the environment during certification testing, where the focus is on adhering to standards and meeting RF emissions requirements.

However, in the real world our products will be surrounded by other systems operating in the same or similar spectrum, such as audio transceivers, beacons, mesh networks, Wi-Fi access points, and personal mobile phones and tablets. The environment quickly becomes congested, and the product design needs to adapt to and manage these environmental wireless ‘stresses’.

Performance Testing Challenges Associated with Low Power HID Devices

Low power HID products are designed to strike a balance between energy conservation and performance. If the design is biased towards minimising power consumption, wake-up time and responsiveness (latency) may suffer. Similarly, optimising for performance will reduce battery life.

To develop a good understanding of the latency characteristics, it is important to run extensive stress cycles of thousands of operations. This produces a performance distribution that helps us understand real-world behaviour.
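The following minimal Python sketch shows one way to turn thousands of stress cycles into a performance distribution. It is an illustration only, not Nextgen's tooling. It assumes latency has already been measured per operation in milliseconds. The function name `summarise_latency` and the `target_max_ms` threshold are hypothetical. The sketch reports percentiles and the share of operations that fall outside the target range.

```python
import statistics


def summarise_latency(samples_ms, target_max_ms):
    """Summarise a latency distribution gathered from repeated stress cycles.

    samples_ms: measured latency per operation in milliseconds (hypothetical format).
    target_max_ms: assumed upper bound of the acceptable latency range.
    """
    ordered = sorted(samples_ms)
    # With n=100, quantiles() returns 99 cut points: index 49 is P50, 94 is P95, 98 is P99.
    # Needs at least two samples.
    cuts = statistics.quantiles(ordered, n=100, method="inclusive")
    out_of_range = sum(1 for s in ordered if s > target_max_ms)
    return {
        "count": len(ordered),
        "mean_ms": statistics.fmean(ordered),
        "p50_ms": cuts[49],
        "p95_ms": cuts[94],
        "p99_ms": cuts[98],
        "max_ms": ordered[-1],
        "out_of_range_pct": 100.0 * out_of_range / len(ordered),
    }
```

For example, with synthetic data, `summarise_latency([8.0] * 95 + [25.0] * 5, target_max_ms=20.0)` reports a median of 8.0 ms and 5.0% of operations out of range. Percentiles are shown alongside the mean because a small tail of slow responses, which users do notice, barely moves the average.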

Performance can be equally affected by RF congestion generated by other products and systems. In this case, it is important to simulate a typical RF environment containing continuous or dynamic traffic, including ping events. RF attenuators can be used to control the signal levels of the interferers.

Such a setup enables us to model product performance across different RF conditions and judge relative performance. We can also use these tests to benchmark performance against competitors’ similar products.
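An attenuator-based setup like this can be expressed as a test matrix. The sketch below assumes a simplified link budget: the interferer level at the device under test (DUT) equals the interferer's output power, minus a fixed path loss, minus the attenuator setting. The names `RfCondition` and `build_rf_sweep` are hypothetical, and so are all the values. A real rig would normally calibrate the level at the DUT rather than calculate it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RfCondition:
    attenuation_db: float
    interferer_level_dbm: float  # estimated interferer level at the DUT


def build_rf_sweep(interferer_tx_dbm, path_loss_db, attenuations_db, cycles_per_condition):
    """Build a hypothetical test plan of (RF condition, cycle count) pairs.

    Assumes: level at DUT = interferer output power - fixed path loss - attenuator setting.
    """
    plan = []
    # Highest attenuation first, so the sweep runs from the quietest to the most congested condition.
    for att in sorted(attenuations_db, reverse=True):
        level = interferer_tx_dbm - path_loss_db - att
        plan.append((RfCondition(att, level), cycles_per_condition))
    return plan
```

Running the same number of cycles at every condition keeps the resulting latency distributions directly comparable, both across RF conditions and between competing products.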

Automated Performance Testing for Low Power HID Products

To develop a robust understanding of HID performance, our testing must reflect how the end user actually operates the device. For example, keys on a wireless keyboard must be operated many thousands of times. Similarly, the drag and drop function on a mouse must remain stable when used with the most popular devices and operating systems.

It is unrealistic to perform 30,000 key presses or 10,000 drag and drop cycles manually, so we need a more consistent and repeatable tool. In addition, for each test cycle we may want to record wireless air traces and correlate them with failed test cases. The following examples show where automated stress testing was used to validate and then improve a design.
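Correlating air traces with failed test cycles is largely a matter of aligning timestamps. The sketch below is a hypothetical example and makes two assumptions. First, the test log and the air-trace capture share a common clock. Second, trace events are available as `(timestamp_s, description)` tuples sorted by time. `TestCycle` and `correlate_failures` are illustrative names and are not part of any analyser's API.

```python
import bisect
from dataclasses import dataclass


@dataclass
class TestCycle:
    cycle_id: int
    start_s: float
    end_s: float
    passed: bool


def correlate_failures(cycles, trace_events, margin_s=0.5):
    """Map each failed cycle to the air-trace events captured around it.

    trace_events: (timestamp_s, description) tuples sorted by timestamp (assumed format).
    margin_s: extra time either side of the cycle window, to catch packets just before/after it.
    """
    times = [t for t, _ in trace_events]
    result = {}
    for cycle in cycles:
        if cycle.passed:
            continue
        # Binary search for the slice of events inside [start - margin, end + margin].
        lo = bisect.bisect_left(times, cycle.start_s - margin_s)
        hi = bisect.bisect_right(times, cycle.end_s + margin_s)
        result[cycle.cycle_id] = trace_events[lo:hi]
    return result
```

Only failed cycles are extracted. This keeps the output small enough to review by hand even when a run contains tens of thousands of cycles.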

Stress Testing Mouse Latency Performance

By running thousands of automated drag and drop test cycles on an automation platform, the test team were able to characterise the latency performance of a wireless mouse. The tests were performed by a cobot, with the mouse placed on different test surfaces ranging from laminated wood to quartz worktops. For each drag and drop action, the design team received performance data showing its responsiveness, together with a video recording and a Bluetooth analyser log.

The Result

Analysis of the test data showed that approximately 5% of the drag and drop actions fell outside the target latency range. The design was updated with new firmware to improve the latency of the drag and drop function.

Stress Testing the Performance of a Wireless Gaming Keyboard

Online gaming is a very serious business for some people. The HID products used during gameplay must meet stringent performance goals to optimise the gaming experience. Responsiveness is especially important for products such as keyboards, to keep player input synchronised with the video imagery.

During gameplay, a player typically uses four or five keys. Automation was used to stress test the responsiveness of the five most used keys, with each key operated 40,000 times across varying duty cycles. The test also exercised the keyboard’s debounce performance.
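One way to check debounce is to compare the presses the automation commanded against the key-down reports the host received. Extra reports in quick succession suggest contact chatter, and a shortfall suggests missed presses. The sketch below is a minimal, hypothetical version, and its input formats are assumptions. The `min_interval_ms` threshold is also an assumption. It must be shorter than the shortest commanded press interval at the fastest duty cycle tested, otherwise genuine presses would be counted as chatter.

```python
from collections import defaultdict


def check_debounce(commanded_presses, reported_downs, min_interval_ms):
    """Compare commanded key presses with the key-down events logged on the host.

    commanded_presses: dict of key -> number of presses issued by the automation.
    reported_downs: list of (key, timestamp_ms) key-down events seen by the host.
    min_interval_ms: assumed shortest genuine gap between presses; closer pairs count as chatter.
    """
    by_key = defaultdict(list)
    for key, t in reported_downs:
        by_key[key].append(t)

    report = {}
    for key, expected in commanded_presses.items():
        times = sorted(by_key.get(key, []))
        # A key-down arriving too soon after the previous one is treated as a bounce, not a press.
        chatter = sum(1 for a, b in zip(times, times[1:]) if b - a < min_interval_ms)
        distinct_presses = len(times) - chatter
        report[key] = {
            "expected": expected,
            "reported": len(times),
            "missed": max(expected - distinct_presses, 0),
            "chatter": chatter,
        }
    return report
```

Chatter and misses are counted separately so that one cannot mask the other. A single key that both bounces once and drops a press would otherwise show a correct total.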

Test Result

The test data showed that debounce performance was variable in some cases, and further software optimisation was required.

“Using Nextgen ATAM automation means QA test teams don’t need programming skills to create complex real world hardware tests utilising a cobot to simulate real human interactions. A QA or Product Validation team can test months’ worth of usage in days.”

Richard Green, Automation Engineering Manager, Nextgen

To find out more about Nextgen's automated testing services, get in touch to speak to one of our experts today.